Dedicated to thoughts about software testing, QA, and other software quality related practices. I will also address software requirements, tools, standards, processes, and other essential aspects of the software quality equation.

Friday, May 28, 2010

The Surprising Truth About What Motivates Us

http://michaelhyatt.com/2010/05/the-surprising-truth-about-what-motivates-us.html

Have a great and safe weekend...and remember those who have served and died for our country!

Randy

Thursday, May 27, 2010

Webinar Links - Elusive Tester to Developer Ratio

Thanks for tuning in to the webcast today.

Here are the links for the recorded session and chat transcript, along with other things I mentioned.

Recorded webinar

Chat transcript

Slides in PDF format

CTE-XL tool

Article - Elusive Tester to Developer Ratio (the one I wrote back in 2000)

Thanks!

Randy

Sunday, May 23, 2010

May 2010 Newsletter Posted

Better late than never! The May issue of the Software Quality Advisor Newsletter is out:

http://riceconsulting.com/home/index.php/Newsletter-Past-Issues/may-2010-test-estimation-based-on-testware.html

You can get your copy each month to your e-mail account by signing up at:

http://riceconsulting.com/home/index.php/Newsletter/the-software-quality-advisor-newsletter-sign-up.html

Friday, May 21, 2010

Webinar - Thursday, May 27 - The Elusive Tester to Developer Ratio

To join the meeting, visit https://my.dimdim.com/ricecon on May 27 at 11:50 a.m., CDT. The webinar starts at 12:00 noon.

Wednesday, May 19, 2010

Book Review - Reflections on Management by Watts S. Humphrey with William R. Thomas

The Capability Maturity Model (CMM) and Capability Maturity Model Integrated (CMMI) have been major forces in software development for at least 20 years. Along with those, the Personal Software Process (PSP) and the Team Software Process (TSP) have also been applied to help make software projects more predictable and manageable.

This book is a collection of essays and articles written by Watts Humphrey, the man who was the influence and drive behind these models and processes. I found this book to be an interesting journey through the thinking of Humphrey as he clearly and rationally outlines the "why" behind the "what." Then, he describes "how" to do the work of managing intellectual and creative people who have to work together to deliver a technical product - on time, within budget, with the right features and with quality.

There are many gems in this very readable book (a great airplane book), such as:

- Defects are Not Bugs

- The Hardest Time to Make a Plan is When You Need it Most

- Everyone Loses With Incompetent Planning

- Every New Idea Starts as a Minority of One

- Projects Get into Trouble at the Very Beginning

- Managing Your Projects

- Managing Your Teams

- Managing Your Boss

- Managing Yourself

Although this book is a collection of essays, it flows very well and reads like it was written as one book. By the way, I felt the Epilogue was excellent - don't skip it.

If there are any doubts about the credibility factor of this book, the advance praise at the front of the book spans four pages and reads like a "who's who" of software development: Steve McConnell, Ed Yourdon, Ron Jeffries, Walker Royce, Capers Jones, Victor Basili, Lawrence Putnam and Bill Curtis, to name a few.

Whether you are fully immersed in the agile project world, or following the CMMI, or just trying to figure out the best way to plan, conduct and manage software projects, this is a book worth reading and taking to heart. In the advance praise, Ron Jeffries (www.XProgramming.com) writes, "I've followed Watts Humphrey's work for as long as I can remember. I recall, in my youth, thinking he was asking too much. Now that I'm suddenly about his age, I realize how many things he has gotten right. This collection from his most important writings should bring these ideas to the attention of a new audience: I urge them to listen better than I did."

Amen, Ron, amen.

Reviewed by Randy Rice

Disclosure of Material Connection: I received one or more of the products or services mentioned above for free in the hope that I would mention it on my blog. Regardless, I only recommend products or services I use personally and believe will be good for my readers. Some of the links in the post above are “affiliate links.” This means if you click on the link and purchase the item, I will receive an affiliate commission. Regardless, I only recommend products or services I use personally and believe will add value to my readers. I am disclosing this in accordance with the Federal Trade Commission’s 16 CFR, Part 255: “Guides Concerning the Use of Endorsements and Testimonials in Advertising.”

Test Estimation Based on Testware

However, there is a technique I have used over the years that plays on risk-based approaches. This technique can be applied to testware, such as test cases. Just remember this is not a scientific model, just an estimation technique.

What is Testware?

Testware is anything used in software testing. It can include test cases, test scripts, test data and other items.

The Problems with Test Cases

Test cases are tricky to use for estimation because:

- They can represent a wide variety of strength, complexity and risk.

- They may be inconsistently defined across an organization.

- Unless you are good at measurement, you don’t know how much time or effort to estimate for a certain type of test case.

- You can’t make an early estimate because you lack essential knowledge – the number of test cases, the details of the test cases and the functionality the test cases will be testing.

Dealing with Variations

“If you’ve seen one test case, you’ve seen them all.” Wrong. My experience is that test cases vary widely. However, there may be similarity between some cases, such as when test cases are logically toggled and combined.

A technique I have used to deal with test case variation is to score each test case based on complexity and risk, which are two driving factors for effort and priority.

The complexity rating is for the test case, not the item being tested. While the item’s complexity is important in assessing risk, we want to focus on the relative effort of performing the test case. You can assign a number between 1 and 10 for the complexity of a test case. It may be helpful to create criteria for this purpose. Here is an example. You can modify it for your own purposes.

1 – Very simple

2 – Simple

3 – Simple with multiple conditions (3 or less)

4 – Moderate with simple set-up

5 – Moderate with moderate set-up

6 – Moderate with moderate set-up and 3 or more conditions

7 – Moderate with complex set-up or evaluation, 3 or more conditions

8 – Complex with simple set-up, 3 or more conditions

9 – Complex with moderate set-up, 5 or more conditions

10 – Complex with complex set-up or evaluation, 7 or more conditions

This assessment doesn’t consider how the test case is described or documented, which can have an impact on how easy or hard a test case is to perform.

Assessing Risk

Risk assessment is both art and science. For estimation, you can be subjective. In fact, my experience is that risk assessment is subjective at some point or other.

This scale is based on the risk (impact) of the test case and its priority in the test. As with the complexity rating, here are sample criteria you can adapt for your own situation:

1 – Lowest priority, lowest impact

2 – Low priority, low impact

3 – Low priority, moderate impact

4 – Moderate priority, moderate impact

5 – Moderate priority, moderate impact, may find important defects

6 – Moderate priority, high impact, has found important defects in the past

7 – High priority, moderate impact, new test

8 – High priority, high impact, may find high-value defects

9 – High priority, high impact, has found high-value defects in the past

10 – Highest priority, highest impact, must perform

Actually, the risk level could be seen from two perspectives - the risk of the item or function you are testing, or the risk of the test case itself. For example, if you fail to perform a test case that in the past has found defects, that could be seen as important enough to include every time you test. Not testing it would be a significant risk. The low risk cases would be those you could leave out and not worry about. Of course, there is a tie-in between these two views. The high-risk functions tend to have high-risk test cases. You could take either view of test case risk and be in the neighborhood for this level of test estimation.

Charting the Test Cases

To visualize how this technique works, we will look at how this could be plotted on a scatter chart. There are four quadrants:

1 – Low complexity, low risk

2 – High complexity, low risk

3 – Low complexity, high risk

4 – High complexity, high risk

Each test case will fall in one of the quadrants. One problem with the quadrant approach is that any test case in the center area of the chart could be seen as borderline. For example, in Figure 1, TC004 is in quadrant 4, but is also close to the other three quadrants. So, it could actually be in quadrant 1 if the criteria are a little off.

Figure 1
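The quadrant idea can be sketched in a few lines of Python. This is only an illustration, not a scientific model: the midpoint of 5 on each 1-to-10 scale and the one-point "borderline" margin are my assumptions, and the function names are made up for this example.

```python
def quadrant(complexity: int, risk: int, midpoint: int = 5) -> int:
    """Return the quadrant (1-4) for a test case scored 1-10 on each axis.

    Assumption: scores above `midpoint` count as "high".
    """
    for score in (complexity, risk):
        if not 1 <= score <= 10:
            raise ValueError("scores must be between 1 and 10")
    high_complexity = complexity > midpoint
    high_risk = risk > midpoint
    # Numbering follows the list above:
    # 1 = low/low, 2 = high complexity/low risk,
    # 3 = low complexity/high risk, 4 = high/high.
    return 1 + int(high_complexity) + 2 * int(high_risk)


def is_borderline(complexity: int, risk: int,
                  midpoint: int = 5, margin: int = 1) -> bool:
    """True when both scores sit within `margin` of the dividing line.

    With the defaults, scores of 5 or 6 on both axes are borderline.
    """
    def near(score: int) -> bool:
        return midpoint - margin < score <= midpoint + margin
    return near(complexity) and near(risk)


# A hypothetical test case scored 6 for complexity and 6 for risk
# lands in quadrant 4 but is flagged as borderline.
print(quadrant(6, 6), is_borderline(6, 6))
```

A borderline flag like this is one way to spot the cases that might move to another quadrant if the scoring criteria shift a little.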

For this reason, you may choose instead to divide the chart into nine sections. This “tic-tac-toe” approach gives more granularity. If a test case falls in the center of the chart, it is clearly in section 5 (Figure 2), which can have its own set of test estimation factors.

Figure 2

All You Need is a Spreadsheet

With many test cases, you would never want to go to the trouble of charting them all. All you need to know is in which section of the chart a test case resides.

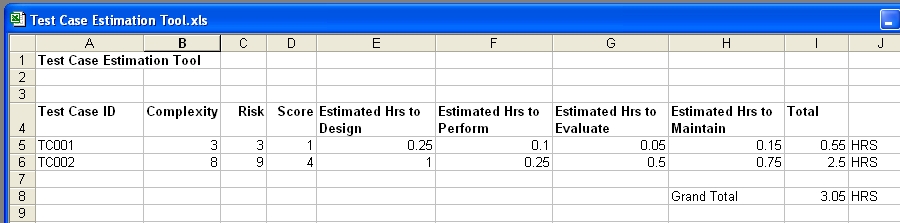

Once you know the complexity and risk scores, all you need to know are the sections on the chart. For example, if the complexity is 3 or less and the risk is 3 or less, the test case falls in section 1 of the nine-section chart. These rules can be written as formulas in a spreadsheet (Figure 3).

Figure 3
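If you prefer code to spreadsheet formulas, the same rules might look like the Python sketch below. The only rules taken from the text are that scores of 3 or less on both scales mean section 1 and that the center of the chart is section 5. The band boundaries (1-3, 4-7, 8-10) and the left-to-right, bottom-to-top numbering of the other sections are my assumptions, so adjust them to match your own chart.

```python
def band(score: int) -> int:
    """Map a 1-10 score to a band: 0 = low, 1 = middle, 2 = high.

    Assumed boundaries: 1-3 low, 4-7 middle, 8-10 high.
    """
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    if score <= 3:
        return 0
    if score <= 7:
        return 1
    return 2


def section(complexity: int, risk: int) -> int:
    """Return the section (1-9) of the nine-section chart.

    Assumed numbering: complexity increases across a row,
    risk increases from one row to the next.
    """
    return 3 * band(risk) + band(complexity) + 1


# Complexity 3 and risk 3 fall in section 1; a test case in the
# middle of both scales falls in section 5.
print(section(3, 3), section(5, 5))
```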

Sampling

So, what if you don’t have a good history of how long certain types of test cases take to perform? You can take samples from each sector of the chart.

Take a few test cases from each section, perform the test cases and measure how long it takes to set up, perform and evaluate each test case.

You now extend your spreadsheet to include the average effort time for each test case (Figure 4).

Figure 4
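Here is a rough Python sketch of the same arithmetic: average the sampled times for each section, then multiply by the number of test cases in that section. The function names and every number in the example are invented for illustration. They are not measurements from a real project.

```python
from collections import Counter
from statistics import mean


def average_minutes_by_section(samples):
    """Average the measured minutes for each chart section.

    `samples` is a list of (section, minutes) pairs from sampled tests.
    """
    by_section = {}
    for sec, minutes in samples:
        by_section.setdefault(sec, []).append(minutes)
    return {sec: mean(values) for sec, values in by_section.items()}


def estimate_total_minutes(case_sections, averages):
    """Estimate total effort: count of cases per section times its average."""
    counts = Counter(case_sections)
    missing = sorted(set(counts) - set(averages))
    if missing:
        raise ValueError(f"no sampled times for sections: {missing}")
    return sum(counts[sec] * averages[sec] for sec in counts)


# Made-up sample timings: (section, minutes to set up, perform, evaluate).
samples = [(1, 10), (1, 14), (5, 30), (5, 40), (9, 90)]
averages = average_minutes_by_section(samples)

# Made-up test suite: the section each test case falls in.
case_sections = [1, 1, 1, 5, 5, 9]
print(estimate_total_minutes(case_sections, averages))
```

The sketch refuses to estimate a section that has no samples, which is a reminder to sample from every section you actually use.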

Adjusting

Your estimate is probably inaccurate. There is a tendency to believe the more involved and defined the method is, the more accurate the estimate will be. However, the reality is that any method can be flawed. In fact, I have seen very elaborate estimation tools and methods which look impressive, but were inaccurate in practice.

It’s good to have some wiggle-room in an estimate as a reserve. Think of this factor as a dial you can turn as your confidence in the estimate increases.
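As a small worked example, the reserve can be treated as a percentage added to the base estimate. The 25% figure below is purely an assumption for illustration, not a recommended value.

```python
def with_reserve(base_estimate: float, reserve: float) -> float:
    """Add a reserve (0.25 means 25%) on top of a base estimate."""
    if reserve < 0:
        raise ValueError("reserve must not be negative")
    return base_estimate * (1 + reserve)


# A hypothetical 200-hour estimate with a 25% reserve.
print(with_reserve(200, 0.25))
```

As your measurements improve and your confidence grows, you would turn the reserve down.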

Conclusion

As with any estimation technique, any number of things can affect the accuracy of the estimate. Estimates based on test cases can be helpful once you have enough history of measuring them.

Sampling can be helpful if you have no past measurements, or if this is a new type of project for your organization. It is still a good idea to measure the actual test case performance times so you can incorporate them in your future estimates.

I hope this technique helps you and provides a springboard for your own estimation techniques.

Tuesday, May 18, 2010

New Test Automation Class a Success!

Thanks to everyone who participated in last week's presentation of my newest workshop, Practical Software Test Automation, in Oklahoma City. The class went well, we had a great time together, and I learned some adjustments I need to make. Thanks to the Red Earth QA SIG for their sponsorship.

We also got some positive buzz from Marcus Tettmar, the maker of Macro Scheduler. Thanks, Marcus.

http://www.mjtnet.com/blog/2010/05/13/new-software-testing-course-featuring-macro-scheduler/

We use Macro Scheduler as the learning tool in the course for test automation and scripting. By the way, version 12 has just been released. I look forward to trying it!

http://www.mjtnet.com/blog/2010/05/17/macro-scheduler-12-is-shipping/

The next presentation will be next month in Rome. If you live in Italy, or have a desire to take a testing class in a great location, join me there on June 16 and 17. I will also be presenting the Innovative Software Testing Approaches workshop that week in Rome.

Innovative Software Testing Approaches

http://www.technologytransfer.eu/event/984/Innovative_Software_Testing_Approaches.html

Software Test Automation

http://www.technologytransfer.eu/event/985/Software_Test_Automation.html

If you are interested in having this workshop in your city or company, just contact me!

Wednesday, May 12, 2010

The 900+ Point Drop in the Dow - A Real-Life Root Cause Analysis Challenge

The other day when the Dow dropped over 900 points, it was blamed on some trader someplace entering an order to sell "billions" instead of "millions" of P&G stock. To date, they still cannot produce the trade or the trader. Something doesn't smell right.

1. I would think that level of trade would require some sort of secondary approval.

2. Isn't there an audit trail of trades that would lead back to the trader?

3. If a billion dollar trade could do this, shouldn't there be an edit or at least a warning message, "You have entered an amount in the billions. Click OK to bring down the entire global financial system"?

This makes me question if this was really the case. Other possibilities could be:

1. A run on stocks that was truly panic selling and this was a way to explain it away without spooking everyone else in the country. I guess this is the "conspiracy theory" view.

2. A software defect...and maybe not a simple one. This could be one of those deeply-embedded ones. I know a little about how the Wall St. systems and processes work and believe me, this is not beyond the realm of possibility.

It may be impossible to know for certain. One would have to perform a deep dive root cause analysis, go through the change logs (if they exist), look at the exact version of code for everything going on at that time, look at a highly dynamic data stream...you get the idea. That probably won't happen. If someone does manage to isolate this as a software defect and can show it, I nominate them for the Root Cause Analysis Hall of Fame, located in Scranton, Pa. (Don't go looking for that...but there is a Tow Truck Museum in Chattanooga, TN.)

Just a random thought...

Tuesday, May 04, 2010

Great Customer Service in Action at Blue Bean Coffee

However, people know great service when they see it.

This morning I was having coffee with my pastor at Blue Bean coffee here in south Oklahoma City (SW 134 and Western). All of a sudden, the lady behind the counter ran out the door with a can of whipped cream in her hand. Turned out she had forgotten to top off the customer's drink. She said, "That would have bothered me all day."

Wow. That made an impression. Really, it's not that big a deal, but in today's "lack of service" culture it stands out.

Compare that to my experience a couple of weeks ago at Starbucks, not too far away. I went in with my own mug and asked for the free cup of coffee they were promoting for Earth Day. (This happened on Earth Day!) The barista looked confused and went in the back office to ask the manager. He came back out and said, "Sorry, that was last week." "OK," I said. "I guess I'll have a tall bold." To which he replied, "We only have a little left." "Is it fresh?" I asked. "We change it every 30 minutes," he said. Now, you coffee drinkers out there know that a little coffee left on the heat for even a short time gets bitter, but I took my chances. Turned out, it was bad but I had already left. I complained to Starbucks online and got a good response back and two free drink coupons.

I was left wondering, though, how hard would it have been for the barista to simply say, "That promotion was last week, but here, have a cup anyway"? Perhaps he was not empowered to do that, or maybe he just wasn't thinking about the lifetime value of a customer.

Remember how Starbucks has tried all the other "ambiance" stuff - music, etc.? I applaud the efforts at creating an experience, but as a customer, I don't go to Starbucks for music. I go there for coffee and hope for decent service. Sometimes they are friendly and sometimes they aren't. For the longest time all they served was Pike Place. Then, finally, someone in corporate woke up and decided to start offering different blends again.

All I can say is that I'll use those Starbucks coupons on the road and get my local coffee at Blue Bean. It's more personal, more friendly and they have better coffee. And that's what it's all about - the coffee and the service.

Keep that in mind as you serve people in IT. If you give great service and have a great product, people will find you irreplaceable and keep coming back for more. You will be more personal and more valuable.